Automated API Testing Best Practices Including AI Testing

In modern software development, application programming interfaces (APIs) enable communication among software systems. Ensuring that APIs function correctly and efficiently is vital for an application’s overall stability and performance. Automated API testing is a powerful approach for achieving this, providing a way to consistently and reliably verify API functionality, performance, and security.

This article explores the technical aspects of automated API testing and offers best practices and advanced techniques for engineers. It covers setting up your environment, writing your first test, continuous integration, and advanced techniques such as mocking and performance testing.

Summary of key automated API testing concepts

Why prioritize automated API testing?

Automated API testing should be a priority in any robust testing strategy for two primary reasons:

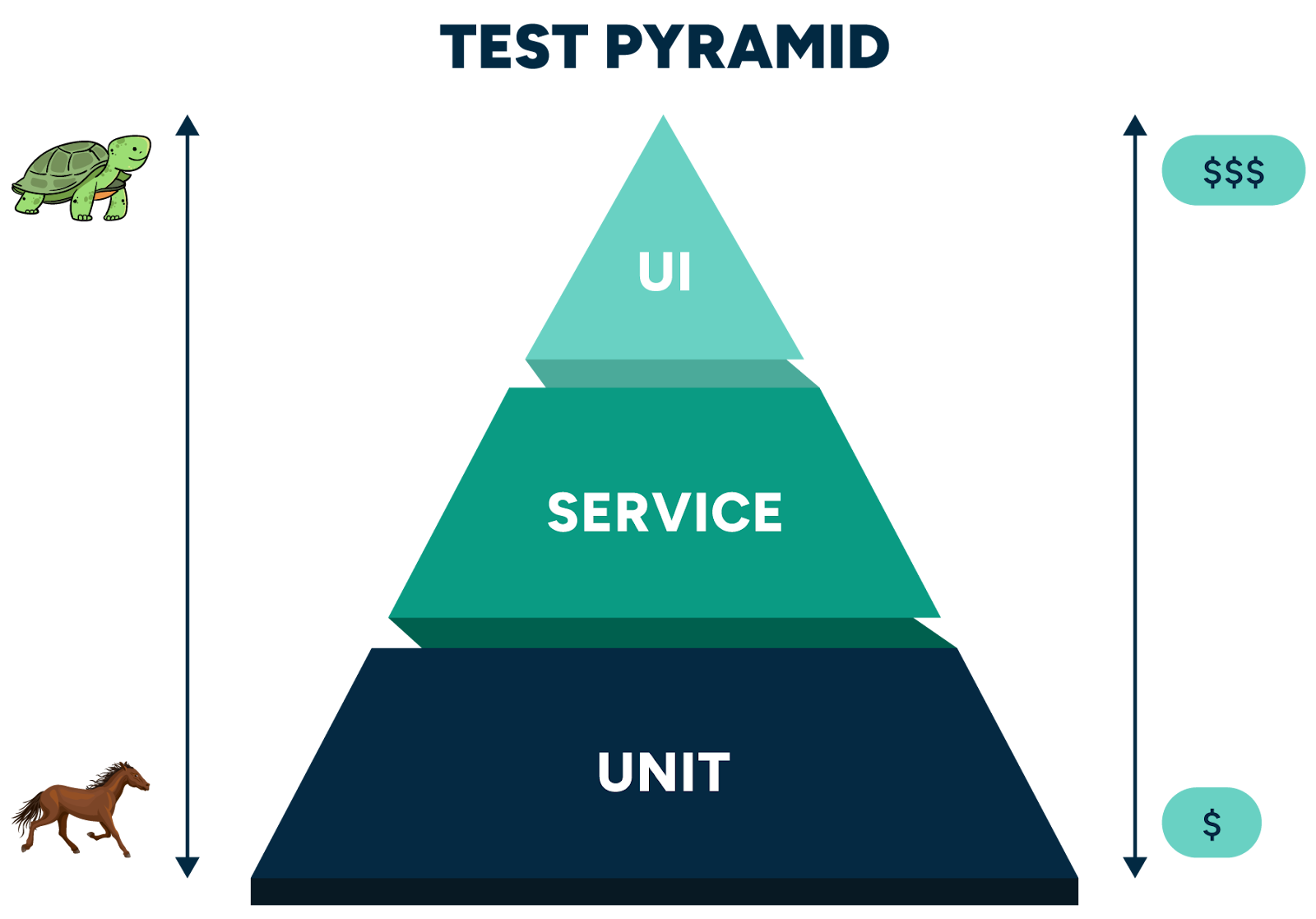

- Test pyramid and stability: API tests sit between unit tests and UI tests in the test pyramid. They are more stable than UI tests because they don’t depend on the user interface, which can change frequently. As a result, API tests tend to be less flaky and more reliable.

- Test execution time and reliability: API tests execute faster than UI tests because they don’t involve rendering web pages or interacting with the graphical elements of the user interface. This speed and reliability are crucial for maintaining a fast and efficient development workflow, allowing for rapid feedback and continuous integration.

Teams often neglect API testing because of the maintenance and engineering effort it requires. A low-code, maintenance-free solution can offset these challenges and keep API testing efficient and reliable. With AI-powered test generation that monitors live transactions, teams can create comprehensive API test suites without manual scripting. This approach significantly reduces the time and effort required for thorough API coverage while keeping the stability and reliability benefits of API testing.

Setting up your environment

Choosing tools and frameworks

Selecting the right tools and frameworks is a big part of effective API testing. Here’s an overview of some popular tools:

- Postman: An intuitive platform for developing, testing, and documenting APIs

- Pytest: A versatile testing framework for Python, commonly paired with the Requests library for API testing

- RestAssured: A Java library for testing RESTful APIs

- SoapUI: A tool for the functional testing of SOAP and REST web services

- Karate: A domain-specific language for API testing that combines API test automation, mocks, and performance testing into a single framework


Manual API testing

Many teams start by writing API tests by hand, for example in Python with the Pytest framework. The basic setup process might look like this:

- Install a programming language.

- Install a package manager.

- Install code and app dependencies.

- Configure a testing environment.

- Set up a maintainable project structure.
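One lightweight way to handle the “configure a testing environment” step is to read settings from environment variables, so the same tests can run locally and in CI against different hosts. The sketch below assumes two hypothetical variables, `API_BASE_URL` and `API_TIMEOUT_SECONDS`; the names and defaults are illustrative, not a standard.

```python
import os

# Hypothetical defaults; the demo API matches the examples in this article
DEFAULT_BASE_URL = "https://jsonplaceholder.typicode.com"
DEFAULT_TIMEOUT_SECONDS = "5"


def load_test_config(env=None):
    """Build test settings from environment variables (or a dict passed in for testing)."""
    env = os.environ if env is None else env
    return {
        # Strip a trailing slash so f"{base_url}/users" never produces "//users"
        "base_url": env.get("API_BASE_URL", DEFAULT_BASE_URL).rstrip("/"),
        "timeout": float(env.get("API_TIMEOUT_SECONDS", DEFAULT_TIMEOUT_SECONDS)),
    }
```

A CI job might set `API_BASE_URL` to a staging host, while local runs fall back to the default.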

Engineers typically update these tests by hand as the application changes. As new features are added, a real-world system can accumulate tens of thousands of such test cases over several years. Old tests must be updated to match new system requirements, so test suite maintenance becomes part of an engineering team’s daily workload.

Example code

Below is a simple example of an API test of a user endpoint using Pytest and Requests. You may see many of these tests in a traditional testing suite.

```python
import requests


def test_get_users():
    # Setup
    url = "https://jsonplaceholder.typicode.com/users"

    # Execution
    response = requests.get(url, timeout=10)

    # Validation
    assert response.status_code == 200
    assert len(response.json()) == 10  # this demo API returns 10 sample users
```

While this example demonstrates a manual approach to API testing, AI-powered test automation can streamline the process significantly. With AI testing, you don’t need to write individual test scripts; an AI testing platform analyzes your API structure and automatically generates comprehensive test suites. This approach reduces the risk of overlooking critical test scenarios.

API testing best practices

Structure tests well

A well-structured test is easy to read, maintain, and extend. Here’s the basic structure of an API test:

- Setup: Prepare any necessary preconditions or data.

- Execution: Make the API call.

- Validation: Verify that the response matches the expected results.

Other considerations include whether tests call external APIs (which may incur costs or be unavailable in test environments), whether data stays consistent between test runs, and whether test data is randomized enough to catch issues that fixed values would hide.
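Randomization and consistency can pull in opposite directions. A common compromise, sketched here under the assumption that your API accepts hypothetical `name` and `email` fields, is to generate varied data from a seeded random generator: each seed yields different values, but logging the seed lets you replay a failing run exactly.

```python
import random
import string


def make_user_payload(seed):
    """Generate a varied but reproducible user payload from a seed."""
    rng = random.Random(seed)  # independent generator; leaves global random state alone
    suffix = "".join(rng.choices(string.ascii_lowercase + string.digits, k=8))
    return {
        "name": f"Test User {suffix}",
        # example.com is reserved for examples (RFC 2606), so it never belongs to a real user
        "email": f"test-{suffix}@example.com",
    }
```

The same seed always produces the same payload, while different seeds spread tests across distinct values.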

Keep tests organized

Effective organization of test cases and test suites helps manage complexity as those test suites grow. For instance, grouping related tests together can enhance readability and maintainability. This approach allows testers to locate and debug issues more efficiently, ensuring that your testing efforts remain streamlined and effective as a project scales.

Common strategies for test organization include grouping related tests together in directories and using descriptive names for test files and functions to indicate their purposes.

Here’s an example directory structure:

```
tests/
├── users/
│   ├── test_get_users.py
│   └── test_create_user.py
└── posts/
    ├── test_get_posts.py
    └── test_create_post.py
```

AI-driven testing tools can simplify test organization further. Such a platform can automatically categorize and group related tests based on API endpoints, functionality, and dependencies, reducing the manual effort required to keep a test suite well structured as it grows in complexity. An intuitive interface also lets teams navigate and manage their test cases regardless of the size of the test suite.


Ensure reusability and maintainability

Writing reusable test components and fixtures enhances the maintainability of a test suite by reducing duplication and promoting consistency across tests.

For example, creating reusable functions for common test operations, such as authentication or data setup, streamlines test development and ensures that updates or modifications can be applied uniformly throughout your test suite. This approach not only improves efficiency but also enhances the reliability and scalability of testing efforts as an application evolves.

Here are some specific techniques to consider:

- Fixtures: Use fixtures, which are reusable setup functions or predefined test data, to set up and tear down common test data or state.

- Modular test functions: Break down tests into smaller, reusable functions.
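As an illustration of modular test functions, the helpers below factor out two operations that many tests repeat: building authentication headers and validating the shape of a user object. The bearer-token scheme and the required fields are assumptions for this sketch; adapt them to your API’s actual contract.

```python
# Hypothetical schema: the fields every user object must contain, with expected types
REQUIRED_USER_FIELDS = {"id": int, "name": str, "email": str}


def auth_headers(token):
    """Build request headers for an API that uses bearer-token auth (an assumption)."""
    return {"Authorization": f"Bearer {token}", "Accept": "application/json"}


def validate_user(user):
    """Return a list of problems with one user object; an empty list means it is valid."""
    problems = []
    for field, expected_type in REQUIRED_USER_FIELDS.items():
        if field not in user:
            problems.append(f"missing field: {field}")
        elif not isinstance(user[field], expected_type):
            problems.append(f"{field} should be {expected_type.__name__}")
    return problems
```

Inside a test, `for user in response.json(): assert validate_user(user) == []` keeps each test short, and a schema change only needs to be made in `REQUIRED_USER_FIELDS`.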

Here’s an example of using a Pytest fixture to provide a shared base URL for a GET request:

```python
import pytest
import requests


@pytest.fixture
def base_url():
    # Pytest passes this value to any test that declares a `base_url` argument
    return "https://jsonplaceholder.typicode.com"


def test_get_users(base_url):
    response = requests.get(f"{base_url}/users", timeout=10)
    assert response.status_code == 200
```

An AI-driven testing platform learns from your API structure and usage patterns, creating modular and maintainable tests that adapt to changes in your API. This reduces the need for constant manual updates and keeps your test suite efficient and effective as your application evolves.

Implement error handling and reporting

Error handling and reporting are crucial components of an effective engineering strategy. They allow teams to quickly identify, diagnose, and resolve issues in real time while providing valuable insights into system performance and reliability over time.

Here are some methods of handling and reporting errors:

- Exception handling: Assertions are conditions that must be true for a test to pass, and including meaningful error messages in them helps identify and debug issues quickly. Beyond assertions, tests can “catch” errors raised as exceptions. Constructs such as try-catch blocks (try/except in Python) handle expected exceptions during test execution, which prevents tests from crashing abruptly and allows more controlled error management.

Here’s an example of catching an error using Python:

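```python
import logging

import requests

try:
    response = requests.get("https://jsonplaceholder.typicode.com/users", timeout=5)
    response.raise_for_status()  # raises HTTPError for 4xx/5xx responses
except requests.exceptions.Timeout as e:
    # Handle the specific, expected failure first
    logging.error(f"Request timed out: {e}")
except Exception as e:
    # Fall back to a catch-all for anything unexpected
    logging.error(f"Unexpected error: {e}")
```

- Logging: Where applicable, implement logging in app builds, CI/CD runs, and test suites. Log benchmarks, test run times, and server response times. Use different log levels (DEBUG, INFO, WARNING, ERROR) to categorize messages appropriately.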

```python
import logging

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)

logger.info("Starting test suite")
logger.debug("Detailed debug information")  # not shown: DEBUG is below the INFO threshold
logger.warning("Potential issue detected")
logger.error("Test failed: unexpected status code")
```

- Real-time monitoring: Integrate your test automation with monitoring tools like Grafana or Kibana to visualize test results and system performance metrics in real-time dashboards.

- Trend analysis: Implement a system to track and analyze test results over time. This can help identify:

  - Recurring issues

  - Performance degradation

  - Failure-prone areas of a system
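A minimal sketch of such a system, assuming results are exported as `(run_number, test_name, passed, duration_seconds)` records (a hypothetical format; JUnit XML files or a database table would work as well). It flags tests whose failure rate crosses a threshold and tests whose recent runs are markedly slower than earlier ones; both thresholds are arbitrary defaults to tune for your suite.

```python
from collections import defaultdict
from statistics import mean


def summarize_trends(results, failure_threshold=0.2, slowdown_ratio=1.5):
    """Flag failure-prone tests and tests whose recent runs got slower."""
    runs_by_test = defaultdict(list)
    # Sort by run number so each test's history is in chronological order
    for run, name, passed, duration in sorted(results):
        runs_by_test[name].append((passed, duration))

    report = {}
    for name, runs in runs_by_test.items():
        failure_rate = sum(1 for passed, _ in runs if not passed) / len(runs)
        durations = [duration for _, duration in runs]
        half = len(durations) // 2
        # Compare the mean duration of the newer half of runs against the older half
        degraded = half > 0 and mean(durations[half:]) > slowdown_ratio * mean(durations[:half])
        report[name] = {
            "failure_rate": failure_rate,
            "failure_prone": failure_rate >= failure_threshold,
            "degraded": degraded,
        }
    return report
```

Splitting each history into older and newer halves is a deliberately simple baseline; a rolling window or statistical test would be more robust for noisy timings.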

AI testing can enhance error handling and reporting by providing detailed, actionable insights from your API tests. AI-powered analysis can help identify patterns in failures and potential root causes, enabling teams to diagnose and resolve issues quickly. Comprehensive reporting features in such platforms also support trend analysis, helping teams track test results over time and find areas for improvement in API performance and reliability.

Continuous integration and deployment

Integrating API tests into your CI/CD pipeline ensures that they run automatically with every code change, providing immediate feedback and preventing regressions.

Here’s a simple GitHub Actions workflow for running Pytest:

```yaml
name: API Tests

on: [push, pull_request]

jobs:
  test:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout code
        uses: actions/checkout@v4

      - name: Set up Python
        uses: actions/setup-python@v5
        with:
          python-version: '3.12'

      - name: Install dependencies
        run: pip install -r requirements.txt

      - name: Run tests
        run: pytest tests/
```

Automatically running tests on each commit helps catch issues early. Ways to configure automatic test runs include:

- Configuration files: Use configuration files like .github/workflows/test.yml for GitHub Actions.

- Scripts: Include scripts for setting up the environment and executing tests.

Modern API testing tools integrate with popular CI/CD tools, making it straightforward to add API tests to your existing pipelines. Cloud-based infrastructure lets tests run at scale without straining local resources, which shortens feedback cycles and makes development more efficient. AI-powered test generation also adapts to changes in APIs, so CI/CD pipelines run updated, relevant tests.

Advanced techniques

Mocking and virtualization

Mocking and virtualization are powerful techniques that enable testing without relying on actual APIs or external dependencies. These methods allow engineering teams to:

- Simulate API responses for isolated testing

- Test edge cases and error scenarios that are difficult to reproduce with real APIs

- Develop and test against APIs that are still in development
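For simple cases, Python’s standard library can stand in for a mock server. The sketch below uses `unittest.mock.patch` to replace `urllib.request.urlopen` (swapped in for Requests so the example needs no third-party packages), letting one test simulate a successful response and another simulate an outage without any network traffic. `get_user_count` is a hypothetical function under test.

```python
import json
import urllib.error
import urllib.request
from unittest import mock

BASE_URL = "https://jsonplaceholder.typicode.com"


def get_user_count():
    """Code under test: fetch /users and return the number of users."""
    with urllib.request.urlopen(f"{BASE_URL}/users", timeout=5) as response:
        return len(json.load(response))


def test_get_user_count_with_mocked_response():
    fake_body = json.dumps([{"id": 1}, {"id": 2}]).encode()
    with mock.patch("urllib.request.urlopen") as fake_urlopen:
        # The object bound by `with urlopen(...) as response` returns our canned body
        fake_urlopen.return_value.__enter__.return_value.read.return_value = fake_body
        assert get_user_count() == 2
        fake_urlopen.assert_called_once()


def test_get_user_count_when_api_is_down():
    # side_effect makes the patched call raise, simulating an unreachable server
    with mock.patch("urllib.request.urlopen", side_effect=urllib.error.URLError("down")):
        try:
            get_user_count()
        except urllib.error.URLError:
            pass
        else:
            raise AssertionError("expected URLError when the API is unreachable")
```

Both functions follow Pytest’s naming convention, so `pytest` will collect and run them; the error-scenario test is the kind of edge case that is hard to reproduce against a real API.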

Traditional tools for API mocking and virtualization include WireMock and MockServer. These tools are widely used but can require significant setup and maintenance effort, especially for complex scenarios.

Qualiti’s platform addresses these challenges by offering built-in support for mocking and virtualization, often with more user-friendly interfaces and integration capabilities. This integrated approach allows teams to:

- Easily create and manage mock API responses within the same environment as their tests

- Automatically generate mock responses based on API specifications or recorded traffic using advanced AI technology

- Seamlessly switch between mocked and real API endpoints for comprehensive testing scenarios

By leveraging mocking capabilities, teams can achieve more thorough testing without the need for additional tools or complex setups.

Performance testing

Performance testing evaluates the speed, responsiveness, and stability of an API under various conditions to ensure that it meets performance benchmarks. Incorporating performance testing ensures that your APIs can handle expected loads.
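Whatever tool generates the load, deciding whether an API meets its performance benchmarks usually comes down to comparing latency statistics against a budget. This sketch computes a nearest-rank percentile from response times you have already collected (for example, exported from a load-test run) and checks a hypothetical 95th-percentile budget.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: smallest sample with at least pct% of samples <= it."""
    if not samples:
        raise ValueError("need at least one latency sample")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def meets_benchmark(latencies_ms, p95_budget_ms):
    """Hypothetical pass/fail rule: p95 latency must not exceed the budget."""
    return percentile(latencies_ms, 95) <= p95_budget_ms
```

Percentiles are used here rather than averages because a handful of very slow requests can hide behind a healthy mean.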

Locust and k6 are popular tools for API performance testing. Here’s a basic Locust test:

```python
from locust import HttpUser, between, task


class WebsiteUser(HttpUser):
    # Base URL for self.client requests (can also be passed with --host)
    host = "https://jsonplaceholder.typicode.com"
    # Each simulated user waits 1 to 2 seconds between tasks
    wait_time = between(1, 2)

    @task
    def get_users(self):
        self.client.get("/users")
```

AI testing takes a more integrated and user-friendly approach to performance testing:

- AI-driven test generation: AI can automatically create performance tests based on your API structure and usage patterns. This ensures comprehensive coverage of critical performance scenarios without the need for manual script writing.

- Scalable cloud infrastructure: By using cloud resources, an AI testing platform can simulate high loads and many concurrent users more easily than a traditional local testing setup.

- Integrated analysis: Performance test results are analyzed alongside functional test results, providing a holistic view of API health and performance.

- Adaptive testing: AI can dynamically adjust test scenarios based on real-world usage patterns so that performance tests remain relevant as your API evolves.

Combining these advanced techniques allows teams to address functional, performance, and reliability aspects in a single environment.

Reporting and monitoring

Generating comprehensive test reports

Dedicated reporting tools enable engineering teams to generate detailed, visually appealing test reports. These reports can include test case summaries, pass/fail statistics, execution times, or system details.
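Pytest can write results in the widely supported JUnit XML format with `pytest --junitxml=report.xml`, and most reporting tools and CI servers can ingest that file. As a sketch of what such tools compute, the function below extracts pass/fail counts, total execution time, and failing test names using only the standard library. It assumes the `tests`, `failures`, `errors`, `skipped`, and `time` attributes that pytest writes on each `<testsuite>` element.

```python
import xml.etree.ElementTree as ET


def summarize_junit(xml_text):
    """Summarize a JUnit XML report into pass/fail statistics."""
    root = ET.fromstring(xml_text)
    # Recent pytest versions wrap suites in <testsuites>; older reports may not
    suites = [root] if root.tag == "testsuite" else root.findall("testsuite")

    summary = {"tests": 0, "failures": 0, "errors": 0, "skipped": 0, "time": 0.0}
    for suite in suites:
        for key in ("tests", "failures", "errors", "skipped"):
            summary[key] += int(suite.get(key, 0))
        summary["time"] += float(suite.get("time", 0))

    summary["passed"] = (
        summary["tests"] - summary["failures"] - summary["errors"] - summary["skipped"]
    )
    # A <testcase> containing <failure> or <error> did not pass
    summary["failed_tests"] = [
        case.get("name")
        for case in root.iter("testcase")
        if case.find("failure") is not None or case.find("error") is not None
    ]
    return summary
```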

Tracking test results and identifying trends

Monitoring test results over time helps identify patterns and potential problem areas. Review test reports regularly and look for trends that indicate underlying issues.

Monitoring API performance and health in production

Without production monitoring, teams may not learn that an application has crashed or a network has gone down until users report it. Engineering teams need tooling to respond to events in real time.

Several tools can help you monitor the performance and health of your APIs in production:

- APM tools: Application performance management tools like New Relic, Datadog, and Dynatrace

- Log monitoring: Tools like the ELK Stack (Elasticsearch, Logstash, and Kibana) and Splunk

- Synthetic monitoring: Services like Pingdom and Uptrends to simulate user interactions, letting you monitor API performance and availability
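Hosted services add scheduling, alerting, and probes from multiple regions, but the core of a synthetic check is small: call an endpoint, time the call, and judge the result. The sketch below does this with the standard library; the 500 ms latency budget is an arbitrary example, and the `opener` parameter is a hypothetical hook that lets you substitute a fake in tests.

```python
import time
import urllib.error
import urllib.request


def synthetic_check(url, max_latency_ms=500, timeout=5, opener=urllib.request.urlopen):
    """Probe an endpoint once; report its status code, latency, and overall health."""
    start = time.perf_counter()
    try:
        with opener(url, timeout=timeout) as response:
            status = response.status
    except urllib.error.HTTPError as e:
        # Raised for 4xx/5xx responses; it still carries the status code
        status = e.code
    except OSError:
        # URLError, refused connections, and socket timeouts are all OSError subclasses
        status = None
    latency_ms = (time.perf_counter() - start) * 1000
    healthy = status is not None and 200 <= status < 300 and latency_ms <= max_latency_ms
    return {"url": url, "status": status, "latency_ms": round(latency_ms, 1), "healthy": healthy}
```

Run on a schedule (for example, a cron job or a scheduled CI workflow) against a health endpoint, the returned dictionaries can be logged and fed into the same trend analysis as test results.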

AI testing platforms offer reporting and monitoring features that go beyond traditional tools. Their AI-powered analytics can identify subtle patterns and potential issues in API performance that conventional monitoring approaches might miss. Real-time dashboards and alerts let teams address API health and performance issues before they affect users. Reports include detailed test case summaries, pass/fail statistics, execution times, and trend analysis, presented in a format that both technical and non-technical stakeholders can digest.


Conclusion

Automated API testing is crucial for ensuring modern software applications' reliability, performance, and security. By following best practices for organizing, maintaining, and integrating tests into your CI/CD pipeline, your engineering team can create a robust testing framework that scales with your application. Advanced techniques like mocking and performance testing further enhance the team’s ability to deliver high-quality software.

However, implementing and maintaining a comprehensive API testing strategy can be challenging and time-consuming. This is where Qualiti’s platform offers advantages, such as:

- AI-powered test generation: Its advanced AI algorithms can automatically create thorough API test cases, dramatically reducing the time and effort required for test creation and maintenance.

- Low-code environment: With its intuitive interface, even team members without extensive coding experience can contribute to API testing efforts, broadening your testing capabilities.

- Maintenance-free solution: As your APIs evolve, AI adapts your test suite automatically, eliminating the need for constant manual updates and ensuring that your tests remain relevant and effective.

- Scalability and performance: By leveraging cloud infrastructure, it enables you to run extensive API tests at scale without straining your local resources, ensuring faster feedback and more efficient development cycles.

- Comprehensive reporting: It provides detailed, actionable insights from your API tests, helping you quickly identify and resolve issues before they impact your users.

By leveraging AI testing solutions, engineering teams can overcome common API testing challenges and achieve a new level of efficiency and effectiveness in their testing processes. This allows these teams to focus on innovation and delivering value rather than getting bogged down in test maintenance and script writing.