The Guide to Playwright Test Automation

Playwright is an open-source test automation framework for testing across many browsers, devices, and platforms. Test automation, in which repetitive parts of the testing process are handled by automated workflows or triggered events, forms the backbone of an effective app testing strategy. With Playwright, you can build test coverage across all major browser engines (Chromium, Firefox, and WebKit), a wide range of emulated desktop and mobile devices, and multiple environments.

This article explains how to implement a comprehensive end-to-end testing strategy with Playwright test automation. Through clear examples, you'll discover how to optimize your testing processes and deliver higher-quality software. By the end of the article, you’ll be equipped to create your own test suite and automate code quality checks across your app ecosystem. We will also see how the testing process can be further refined using Qualiti, an AI-powered test suite management platform.

Summary of key Playwright test automation strategies

Create maintainable tests

Write clear and concise tests using descriptive names for tests and variables to enhance readability.

- Follow consistent naming conventions to improve maintainability.

- Ensure each test functions independently, preventing unintended dependencies that can complicate debugging.

- Avoid overly complex logic or convoluted assertions to maintain test clarity and reduce the likelihood of errors.

Avoid cryptic or ambiguous output. Ensure assertions are meaningful and specific. For example, specify the expected value instead of asserting that a value is not null.

Evaluate whether team members can easily understand the error messages from a failed test. If not, consider refining the error messages or providing additional context to improve clarity.
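Here is a minimal sketch of both points. It assumes the hypothetical login page used throughout this article, so the `#username`, `#password`, `#login_button`, and `h1` selectors and the welcome text are placeholders. The commented-out line only checks that something exists. The active assertion names the exact expected text and passes a custom message as the second argument to `expect`, which Playwright includes in the failure output.

```typescript
import { test, expect } from '@playwright/test';

test('welcome heading greets the logged-in user', async ({ page }) => {
  await page.goto('https://app.qualiti.ai/auth/login');
  await page.fill('#username', 'valid_user');
  await page.fill('#password', 'valid_password');
  await page.click('#login_button');

  // Vague: passes as long as *some* h1 exists, and says little when it fails.
  // expect(await page.$('h1')).not.toBeNull();

  // Specific: asserts the exact expected text, and the custom message
  // (second argument to expect) is shown in the failure report.
  await expect(
    page.locator('h1'),
    'welcome heading should greet the user who just logged in',
  ).toHaveText('Welcome, valid_user');
});
```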

Follow the DRY Principle

DRY (Don’t Repeat Yourself) testing aims to eliminate duplication in test code and focus on the essential aspects of system behavior. This reduces maintenance effort and improves test readability.

Let’s examine a scenario where you need to test the login page of a web app using a username and password (both valid and invalid). Each test case in the example below repeats the same steps for navigating to the login page, filling in credentials, clicking the login button, and asserting the expected message.

const { test, expect } = require('@playwright/test');

test('test_login_success', async ({ page }) => {
  await page.goto('https://app.qualiti.ai/auth/login');
  await page.fill('#username', 'valid_user');
  await page.fill('#password', 'valid_password');
  await page.click('#login_button');
  // toContainText is a locator assertion, so check the text of the page body
  await expect(page.locator('body')).toContainText('Welcome, valid_user');
});

test('test_login_failure_invalid_username', async ({ page }) => {
  await page.goto('https://app.qualiti.ai/auth/login');
  await page.fill('#username', 'invalid_user');
  await page.fill('#password', 'valid_password');
  await page.click('#login_button');
  await expect(page.locator('body')).toContainText('Invalid username');
});

test('test_login_failure_invalid_password', async ({ page }) => {
  await page.goto('https://app.qualiti.ai/auth/login');
  await page.fill('#username', 'valid_user');
  await page.fill('#password', 'invalid_password');
  await page.click('#login_button');
  await expect(page.locator('body')).toContainText('Invalid password');
});

test_login_success is a test case that receives page as a parameter. This page object represents a browser page (tab) controlled by Playwright, in which the test runs. The same code is then copied into two variations (invalid username and invalid password) to cover the remaining cases. This approach makes the code redundant and difficult to maintain: if the login process changed, all three tests would need to be updated.

Let’s modify the above code to improve maintainability and readability. You can do that using a function that takes arguments for username, password, and expected message. Then, call that function in every test case where it’s needed.

The difference lies in where the login steps are defined. In the previous example, each test case contained its own copy of the steps. In the example below, they are defined once in a helper function, which makes the tests more concise and modular.

const { test, expect } = require('@playwright/test');

// Shared login steps: navigate, fill in credentials, submit, and check the message
async function testLogin(page, username, password, expectedMessage) {
  await page.goto('https://app.qualiti.ai/auth/login');
  await page.fill('#username', username);
  await page.fill('#password', password);
  await page.click('#login_button');
  await expect(page.locator('body')).toContainText(expectedMessage);
}

test('test_login_success', async ({ page }) => {
  await testLogin(page, 'valid_user', 'valid_password', 'Welcome, valid_user');
});

test('test_login_failure_invalid_username', async ({ page }) => {
  await testLogin(page, 'invalid_user', 'valid_password', 'Invalid username');
});

test('test_login_failure_invalid_password', async ({ page }) => {
  await testLogin(page, 'valid_user', 'invalid_password', 'Invalid password');
});
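The helper can be taken one step further by keeping the scenarios in a data table and generating one test per row. Playwright's documentation describes this pattern as parameterized tests. The sketch below reuses the article's placeholder URL, selectors, and messages. It is written in TypeScript, but the same loop works in the JavaScript files above.

```typescript
import { test, expect } from '@playwright/test';

// One row per scenario: adding a case means adding a line of data, not a new test body.
const loginCases = [
  { name: 'success', username: 'valid_user', password: 'valid_password', expected: 'Welcome, valid_user' },
  { name: 'invalid username', username: 'invalid_user', password: 'valid_password', expected: 'Invalid username' },
  { name: 'invalid password', username: 'valid_user', password: 'invalid_password', expected: 'Invalid password' },
];

for (const { name, username, password, expected } of loginCases) {
  // Each iteration registers a separate test; titles must stay unique.
  test(`login: ${name}`, async ({ page }) => {
    await page.goto('https://app.qualiti.ai/auth/login');
    await page.fill('#username', username);
    await page.fill('#password', password);
    await page.click('#login_button');
    await expect(page.locator('body')).toContainText(expected);
  });
}
```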


Playwright fixtures

Fixtures in Playwright are predefined data sets, objects, or environments that are set up before a test case runs and cleaned up afterward. They give each test a controlled, isolated environment, which keeps tests independent of one another and makes test data easier to manage.

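The table below gives an overview of the built-in fixtures within Playwright.

| Fixture | Type | Description |
| --- | --- | --- |
| page | Page | Isolated page for this test run. |
| context | BrowserContext | Isolated context for this test run; the page fixture belongs to this context. |
| browser | Browser | Browser instance shared across tests to optimize resources. |
| browserName | string | Name of the browser running the test: chromium, firefox, or webkit. |
| request | APIRequestContext | Isolated APIRequestContext instance for this test run. |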

Let’s use the page fixture as an example.

import { test, expect } from '@playwright/test';

test('basic test', async ({ page }) => {
  // { page } represents a browser page object provided by Playwright
  // page.goto(url)
  // page.reload()
  // page.goBack()
  // ...
});

Playwright automatically initializes a page fixture for each test, providing a Page object that can be used within the test. The fixture is created before the test starts and disposed of after it is completed.
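You can also define your own fixtures with `test.extend`. The sketch below wraps the login steps from earlier in a hypothetical `LoginPage` class and exposes it as a `loginPage` fixture. Code before `await use(...)` is setup, and code after it would be teardown. The class, selectors, and URL are placeholders.

```typescript
import { test as base, expect, type Page } from '@playwright/test';

// Hypothetical page object wrapping the article's placeholder login steps.
class LoginPage {
  constructor(private readonly page: Page) {}

  async goto(): Promise<void> {
    await this.page.goto('https://app.qualiti.ai/auth/login');
  }

  async login(username: string, password: string): Promise<void> {
    await this.page.fill('#username', username);
    await this.page.fill('#password', password);
    await this.page.click('#login_button');
  }
}

// Extend the built-in test with a `loginPage` fixture built on top of `page`.
const test = base.extend<{ loginPage: LoginPage }>({
  loginPage: async ({ page }, use) => {
    // Setup: runs before each test that requests `loginPage`.
    const loginPage = new LoginPage(page);
    await loginPage.goto();
    // Hand the fixture to the test; anything after this line is teardown.
    await use(loginPage);
    // No extra teardown needed: Playwright closes `page` after the test.
  },
});

test('valid credentials show the welcome message', async ({ page, loginPage }) => {
  await loginPage.login('valid_user', 'valid_password');
  await expect(page.locator('body')).toContainText('Welcome, valid_user');
});
```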

Determine how to handle flaky tests

Flaky tests produce inconsistent results despite no apparent changes to the code or environment. They can be frustrating and time-consuming to deal with. Assess the testing environment for factors contributing to flakiness, such as network instability, resource contention, or platform-specific issues.

Test retries provide an automatic mechanism for dealing with flaky tests. Playwright Test executes tests in separate worker processes, which are independent operating system processes managed by the test runner. Each worker operates in an identical environment and launches its own browser instance. When a test fails, Playwright discards that worker process together with its browser and retries the test in a fresh one.

You can configure retries directly in the command line using the --retries flag (npx playwright test --retries=3) or through the Playwright config file.
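Retries can also be set for a single file with `test.describe.configure`. Inside a test, `testInfo.retry` indicates whether the current run is a retry, which is useful for cleaning up state that a failed attempt left behind. In this sketch, `resetTestData` is a hypothetical helper and the login steps reuse the article's placeholders. Tests that fail at first and then pass on a retry are reported as "flaky", so they remain easy to find later.

```typescript
import { test, expect } from '@playwright/test';

// Give the tests in this file up to two retries, regardless of the global setting.
test.describe.configure({ retries: 2 });

// Hypothetical helper: reset server-side state a failed attempt may have left behind.
async function resetTestData(): Promise<void> {
  // e.g. call an internal cleanup endpoint here
}

test('login succeeds with valid credentials', async ({ page }, testInfo) => {
  // testInfo.retry is 0 on the first attempt and 1, 2, ... on retries.
  if (testInfo.retry > 0) {
    await resetTestData();
  }
  await page.goto('https://app.qualiti.ai/auth/login');
  await page.fill('#username', 'valid_user');
  await page.fill('#password', 'valid_password');
  await page.click('#login_button');
  await expect(page.locator('body')).toContainText('Welcome, valid_user');
});
```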

When a test consistently fails, even after retries, it's essential to investigate the underlying cause instead of just retrying it. Otherwise, over-relying on retries masks the issue instead of solving it and can complicate things later.

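Playwright’s built-in debugging tool, the Playwright Inspector, opens when you add the --debug flag to the run command (npx playwright test --debug). It runs the browser in headed mode, starts paused, and lets you step through the test one action at a time, inspect locators, and read the actionability logs. This lets you pinpoint the exact location where the test fails (and why). To set breakpoints and inspect variables in your own test code, you can also run the tests under an IDE debugger, such as VS Code with the Playwright extension.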
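To pause at one specific step instead of at the start, you can call `page.pause()` in the test and run it with a visible browser, for example with `npx playwright test --headed` or `--debug`. Here is a minimal sketch using the article's placeholder login page:

```typescript
import { test, expect } from '@playwright/test';

test('debug the login flow', async ({ page }) => {
  await page.goto('https://app.qualiti.ai/auth/login');
  await page.fill('#username', 'valid_user');

  // Stops here and opens the Playwright Inspector (requires a headed browser),
  // so you can step through the remaining actions. Remove before committing.
  await page.pause();

  await page.fill('#password', 'valid_password');
  await page.click('#login_button');
  await expect(page.locator('body')).toContainText('Welcome, valid_user');
});
```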

Create a test setup and teardown strategy

When you're setting up your tests, you usually need some code to run before all your tests start (called "global setup") and some code to run after they're done (called "global teardown"). There are two main ways to do this.

Project dependencies

Think of project dependencies as a way to create a task chain. You set up one project that runs before others and handles all the global setup tasks. This method is recommended because it provides more clarity and better tracking of what happens during your test setup. It makes your testing process smoother and more organized.

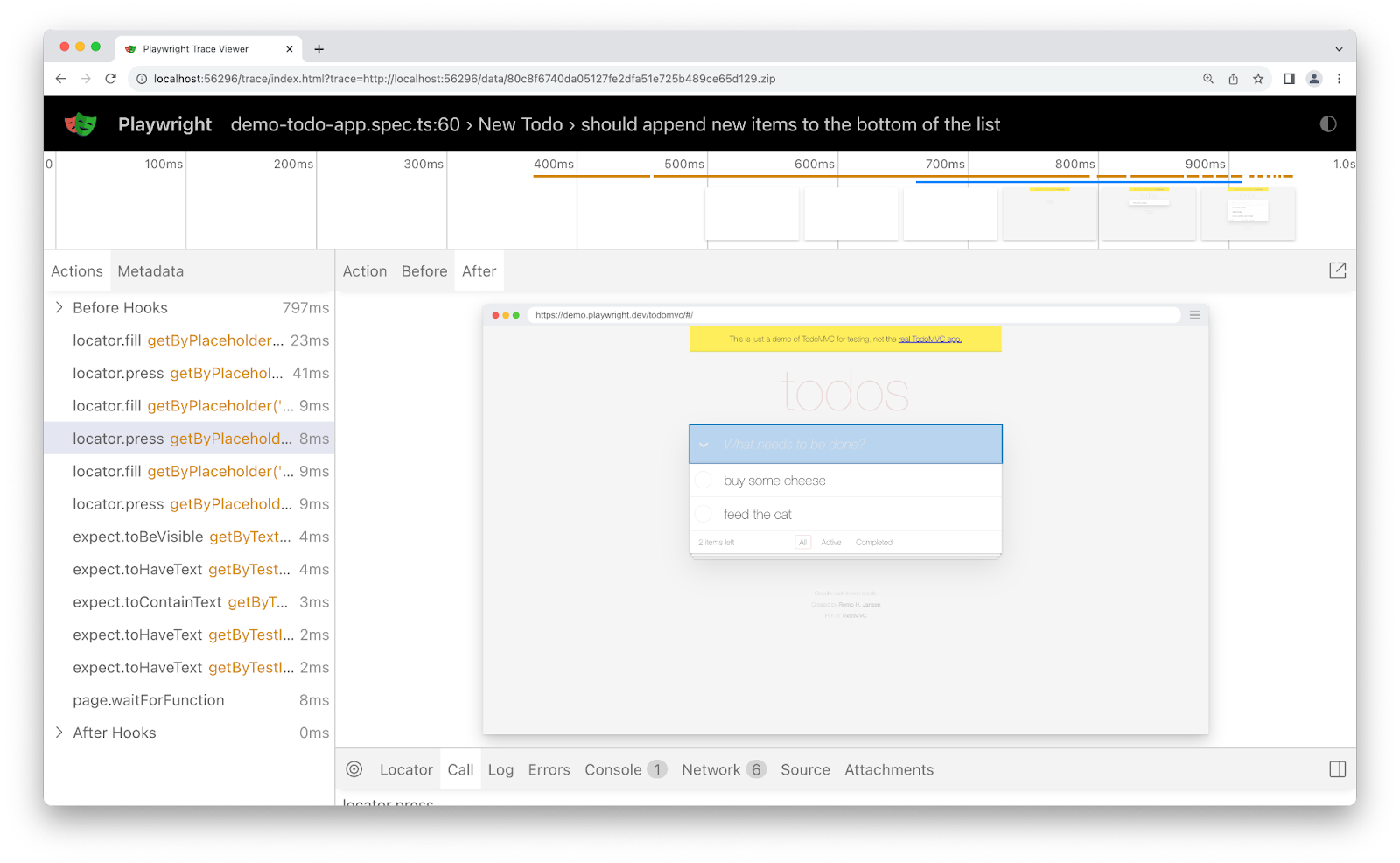

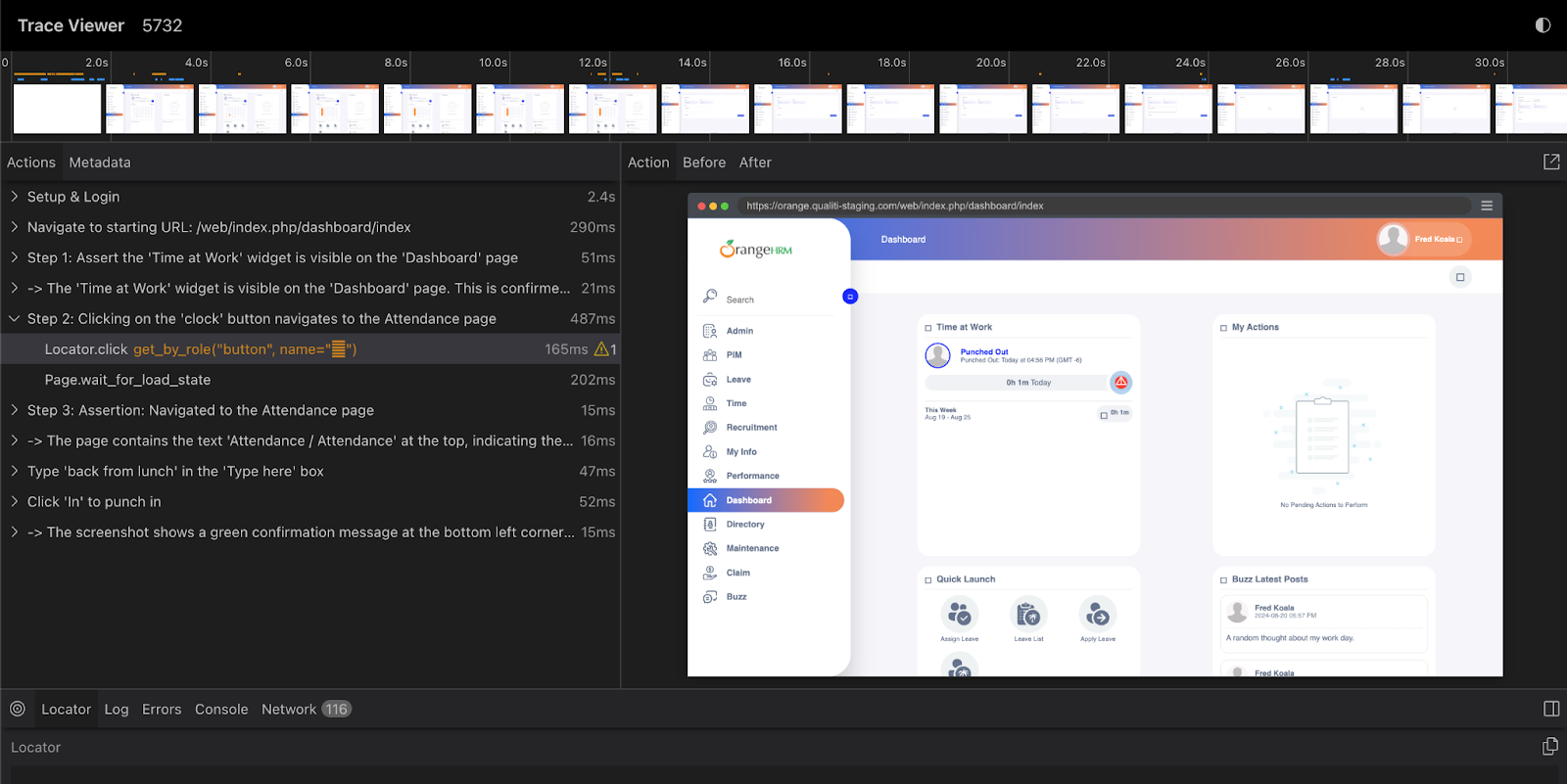
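Here is a sketch of such a chain in `playwright.config.ts`. It assumes hypothetical `global.setup.ts` and `global.teardown.ts` files in the test directory, each written as ordinary tests (for example with `import { test as setup } from '@playwright/test'`). The `chromium` project lists `setup` in `dependencies`, so it runs only after setup succeeds. The `teardown` option names the project that runs after all dependent projects have finished.

```typescript
import { defineConfig, devices } from '@playwright/test';

export default defineConfig({
  testDir: './tests',
  projects: [
    {
      // Runs first; its tests perform the global setup work.
      name: 'setup',
      testMatch: /global\.setup\.ts/,
      // Runs after every project that depends on `setup` has finished.
      teardown: 'cleanup',
    },
    {
      name: 'cleanup',
      testMatch: /global\.teardown\.ts/,
    },
    {
      // Regular tests: start only after the `setup` project succeeds.
      name: 'chromium',
      use: { ...devices['Desktop Chrome'] },
      dependencies: ['setup'],
    },
  ],
});
```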

One benefit of project dependencies is that the setup runs as regular tests, so it appears in the test report and can be inspected with Playwright Trace Viewer. When tracing is enabled (for example, for failed tests on CI), Playwright records a trace of every action and attaches it to the report, making debugging easier and quicker.

Global setup file

Global setup and teardown files are useful for preparing or cleaning up the environment for all tests, especially when dealing with shared resources like databases or test servers. The testing framework automatically runs the code in the setup file before the test run. Similarly, you can have a global teardown file to clean up afterward. Setting it up in Playwright is straightforward: just point to the files in the config.

import { defineConfig } from '@playwright/test';

export default defineConfig({
  globalSetup: require.resolve('./global-setup'),
  globalTeardown: require.resolve('./global-teardown'),
});
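The referenced file exports a single async function. The sketch below is adapted to the article's placeholder login page. It signs in once and saves the authenticated state with `storageState`, so that tests can start already logged in. The environment variable names and the output path are hypothetical.

```typescript
// global-setup.ts
import { chromium } from '@playwright/test';

// Runs once before the whole test run. Playwright also passes the resolved
// config as an argument, which this sketch does not need.
async function globalSetup(): Promise<void> {
  const browser = await chromium.launch();
  try {
    const page = await browser.newPage();
    await page.goto('https://app.qualiti.ai/auth/login');
    // Hypothetical env vars for credentials, falling back to the article's placeholders.
    await page.fill('#username', process.env.TEST_USERNAME ?? 'valid_user');
    await page.fill('#password', process.env.TEST_PASSWORD ?? 'valid_password');
    await page.click('#login_button');
    // Wait until login has completed before capturing cookies and storage.
    await page.getByText('Welcome,').first().waitFor();
    // Hypothetical path; tests reuse it via `use: { storageState: 'playwright/.auth/user.json' }`.
    await page.context().storageState({ path: 'playwright/.auth/user.json' });
  } finally {
    await browser.close();
  }
}

export default globalSetup;
```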

However, your test reports may not provide as much detail about what happened during the setup, and you (usually) won’t have the detailed tracing of actions that you would get with project dependencies. A global setup file is a good choice for simpler projects or when you have a straightforward setup that doesn’t require the extra features that come with project dependencies.


Parallelism

Running multiple tests simultaneously improves efficiency and resource utilization, and it delivers faster feedback on code changes. By default, Playwright runs test files in parallel, while tests within a single file run sequentially in the same worker process. You can turn off parallel test execution by limiting the number of workers to 1, either in the config file or with the --workers=1 flag.

import { defineConfig } from '@playwright/test';

export default defineConfig({
  // Limit the number of workers on CI, use default locally
  workers: process.env.CI ? 2 : undefined,
});
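To let the tests inside a single file run in parallel as well, you can opt that file in with `test.describe.configure({ mode: 'parallel' })`. Setting `fullyParallel: true` in the config does the same for every file. Here is a minimal sketch with placeholder selectors. It works only because each test is independent and receives its own isolated `page`.

```typescript
import { test, expect } from '@playwright/test';

// Allow Playwright to distribute this file's tests across workers
// instead of running them one after another in a single worker.
test.describe.configure({ mode: 'parallel' });

test('login page shows the username field', async ({ page }) => {
  await page.goto('https://app.qualiti.ai/auth/login');
  await expect(page.locator('#username')).toBeVisible();
});

test('login page shows the password field', async ({ page }) => {
  await page.goto('https://app.qualiti.ai/auth/login');
  await expect(page.locator('#password')).toBeVisible();
});
```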

Whether parallel testing suits your project depends on its specific circumstances. It offers significant benefits, but consider these potential challenges:

- If some tests rely on the output of other tests, you need to ensure a proper execution order.

- If multiple tests interact with the same resources (e.g., databases, files), you might need to implement synchronization mechanisms to avoid conflicts.
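For the first case, Playwright offers serial mode. In this sketch, which uses placeholder URL, selectors, and credentials, the tests in the file run in order in one worker and share a single page. The second test can therefore rely on the login performed by the first. If one test fails, the remaining ones are skipped. Use serial mode sparingly, since dependent tests are harder to debug and cannot run in parallel.

```typescript
import { test, expect, type Page } from '@playwright/test';

// Run the tests in this file in order, in one worker; a failure skips the rest.
test.describe.configure({ mode: 'serial' });

// One page shared by the dependent tests below.
let page: Page;

test.beforeAll(async ({ browser }) => {
  page = await browser.newPage();
});

test.afterAll(async () => {
  await page.close();
});

test('logs in', async () => {
  await page.goto('https://app.qualiti.ai/auth/login');
  await page.fill('#username', 'valid_user');
  await page.fill('#password', 'valid_password');
  await page.click('#login_button');
});

test('shows the welcome message after login', async () => {
  // Depends on the session created by the previous test.
  await expect(page.locator('body')).toContainText('Welcome, valid_user');
});
```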

Qualiti supports Playwright’s parallel testing capabilities and takes them further, automatically mitigating flaky tests, selector issues, and false failures between test suite runs.

Plan for automation-resistant UI elements

Some elements often present challenges for automated testing due to their dynamic nature, complexity, or reliance on human interaction. Let’s look at some examples and mitigation methods.

Bot detection

Websites often employ bot detection mechanisms (CAPTCHA, volume analysis, HTTP fingerprinting, IP blacklisting, or even user behavior analysis) to prevent automated scripts from accessing or manipulating their content. These mechanisms can interfere with automated testing tools.

Running tests that closely mimic human behavior, such as incorporating realistic mouse movements, varying interaction timing, and avoiding repetitive patterns, can reduce the chance of triggering these mechanisms.

Headless mode is a browser operating mode that runs without rendering a visible user interface on the screen, and it is Playwright's default. It can be controlled programmatically, reduces resource usage, and improves testing speed. However, some bot detection systems specifically look for headless browsers, so headless mode on its own is not a reliable way to avoid them. Where possible, run tests against an environment in which bot protection is disabled or your test traffic is allowlisted.
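Here is a sketch of human-like pacing with standard Playwright APIs. `pressSequentially` types one character at a time with a delay, and `mouse.move` with `steps` moves the pointer through intermediate positions. The selectors, text, and timing values are placeholders. None of this guarantees that a given bot detection system will not flag the test.

```typescript
import { test, expect } from '@playwright/test';

test('types credentials with human-like pacing', async ({ page }) => {
  await page.goto('https://app.qualiti.ai/auth/login');

  // Type character by character with a delay (ms) between keystrokes,
  // instead of filling each field instantly.
  await page.locator('#username').pressSequentially('valid_user', { delay: 120 });
  await page.locator('#password').pressSequentially('valid_password', { delay: 150 });

  // Glide the mouse to the button through 15 intermediate positions before clicking.
  const button = page.locator('#login_button');
  const box = await button.boundingBox();
  if (box) {
    await page.mouse.move(box.x + box.width / 2, box.y + box.height / 2, { steps: 15 });
  }
  await button.click();

  await expect(page.locator('body')).toContainText('Welcome, valid_user');
});
```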

Dynamic content

Ads and cookie consent banners hinder automated testing due to their dynamic nature and user interaction requirements. To eliminate ads, employ ad-blocking tools or extensions and utilize cookies as fixtures to bypass cookie consent banners during testing.
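Here is a minimal sketch of both techniques, applied to every test in a file through `beforeEach`. Requests to a hypothetical list of ad hosts are aborted with `context.route`. A hypothetical consent cookie is added with `context.addCookies` so the banner never appears. Replace the host list and the cookie name and value with whatever your application actually uses.

```typescript
import { test, expect } from '@playwright/test';

// Hypothetical ad/tracker hosts; replace with the ones your app actually loads.
const AD_HOSTS = ['doubleclick.net', 'googlesyndication.com'];

test.beforeEach(async ({ context }) => {
  // Abort any request whose hostname is, or is a subdomain of, a blocked ad host.
  await context.route('**/*', (route) => {
    const host = new URL(route.request().url()).hostname;
    const isAd = AD_HOSTS.some((ad) => host === ad || host.endsWith(`.${ad}`));
    return isAd ? route.abort() : route.continue();
  });

  // Hypothetical consent cookie: use the name/value your consent banner checks.
  await context.addCookies([
    { name: 'cookie_consent', value: 'accepted', domain: 'app.qualiti.ai', path: '/' },
  ]);
});

test('login page loads without ads or a consent banner', async ({ page }) => {
  await page.goto('https://app.qualiti.ai/auth/login');
  await expect(page.locator('#username')).toBeVisible();
});
```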

Other dynamic content types, such as in-game purchases and geolocation elements (customized features based on device location), may have to be included in Playwright test automation. These introduce complexity to the testing process. For example, you may have to simulate in-game purchases using appropriate tools and libraries or mock geolocation data to test your application's behavior in different regions.
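Geolocation is one of the easier cases, because Playwright can emulate it directly. This sketch grants the geolocation permission and starts the page in Paris via `test.use`. It then moves the page to New York with `context.setGeolocation`, reading back what the page sees through `navigator.geolocation`. The coordinates and URL are placeholders. In a real test, you would assert on your app's region-specific content instead.

```typescript
import { test, expect } from '@playwright/test';

// Emulate a device located in Paris for every test in this file.
test.use({
  geolocation: { latitude: 48.8566, longitude: 2.3522 },
  permissions: ['geolocation'],
});

test('page receives the mocked location', async ({ page, context }) => {
  await page.goto('https://app.qualiti.ai/auth/login');

  // Ask the browser for the position, exactly as the page's own code would.
  const readPosition = () =>
    page.evaluate(
      () =>
        new Promise<{ lat: number; lon: number }>((resolve, reject) =>
          navigator.geolocation.getCurrentPosition(
            (pos) => resolve({ lat: pos.coords.latitude, lon: pos.coords.longitude }),
            reject,
          ),
        ),
    );

  expect((await readPosition()).lat).toBeCloseTo(48.8566);

  // Switch to New York mid-test to check how the app reacts to a region change.
  await context.setGeolocation({ latitude: 40.7128, longitude: -74.006 });
  expect((await readPosition()).lon).toBeCloseTo(-74.006);
});
```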

Incorporate CI/CD into development workflows

Continuous integration (CI) automatically merges code changes from multiple contributors into a shared repository, often several times a day. This process includes running automated tests to ensure new changes do not introduce bugs. Continuous delivery and deployment (CD) build on CI by automatically preparing code changes for release (continuous delivery) or deploying them to production (continuous deployment). Incorporating automated testing into a CI/CD pipeline means that a suite of automated tests runs after every code change to validate it. Deploying smaller, incremental updates rather than large releases reduces the risk of introducing critical bugs.

Consistent environment configuration

Ensure that all environments (development, testing, staging, production) are configured consistently to avoid environment-specific issues. CI/CD services such as CircleCI, Jenkins, and Travis CI can run Playwright tests and help manage environment configuration. To set this up, break your testing process into stages, configure the corresponding jobs, define any environment variables you need, and configure Playwright for the CI environment.
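One way to keep the Playwright side consistent is to read environment-specific values from environment variables in `playwright.config.ts`. The same suite then runs unchanged locally, in CI, and against staging. This sketch assumes a hypothetical `BASE_URL` variable and relies on the `CI` variable that many CI services set automatically.

```typescript
import { defineConfig } from '@playwright/test';

export default defineConfig({
  // Fail the run if a focused test (test.only) was accidentally committed.
  forbidOnly: !!process.env.CI,
  // Retry on CI only, so local failures surface immediately.
  retries: process.env.CI ? 2 : 0,
  // Fewer workers on shared CI machines; Playwright's default locally.
  workers: process.env.CI ? 1 : undefined,
  use: {
    // Hypothetical BASE_URL; tests can then call page.goto('/auth/login').
    baseURL: process.env.BASE_URL ?? 'http://localhost:3000',
    // Record a trace when a failed test is retried, for debugging CI failures.
    trace: 'on-first-retry',
  },
});
```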

Environment isolation

Environment isolation is the technique of mocking, disabling, or otherwise preventing external network requests from within a test environment. This is critical to consider: unintended API usage can lead to unexpected costs and exhaust resource quotas. Use isolated environments to make dependencies and configurations easier to manage. This can be achieved using virtual machines, containers, or sandboxes.
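Within Playwright itself, network interception is one way to achieve this isolation. The sketch below assumes a hypothetical app at `app.example.com` with a `/api/projects` endpoint. A context-level route aborts every request to other hosts. A page-level route, which takes precedence over context routes, answers the API call with canned JSON via `route.fulfill`.

```typescript
import { test, expect } from '@playwright/test';

// Hypothetical host of the application under test.
const APP_HOST = 'app.example.com';

test.beforeEach(async ({ context }) => {
  // Abort every request that leaves the app, so no third-party API
  // can be called, billed, or rate-limited by a test run.
  await context.route('**/*', (route) => {
    const { hostname } = new URL(route.request().url());
    return hostname === APP_HOST ? route.continue() : route.abort();
  });
});

test('shows projects from a mocked API', async ({ page }) => {
  // Serve canned JSON for the hypothetical projects endpoint.
  await page.route('**/api/projects', (route) =>
    route.fulfill({ status: 200, json: [{ id: 1, name: 'Demo project' }] }),
  );
  await page.goto(`https://${APP_HOST}/projects`);
  await expect(page.getByText('Demo project')).toBeVisible();
});
```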

Monorepo vs. Polyrepo

Decide whether to use a single repository for all services (monorepo) or multiple repositories (polyrepo). Each approach has benefits and trade-offs regarding complexity, versioning, and inter-service communication.

Implement strategies to manage inter-repo dependencies effectively, ensuring that changes in one repository do not break others. Implement strict access controls and review processes for deployment to sensitive environments.

Qualiti offers multiple CI integrations for your test plans. It supports any CI runner with a UNIX-like environment, a Bash shell, and cURL. This includes GitHub Actions, GitLab CI/CD, CircleCI, Semaphore, and Jenkins. Native CI tools will also be added soon.

Lint your tests

Linting is the process of statically analyzing code to identify potential errors, stylistic issues, and anti-patterns. In the context of Playwright test automation, linting can help ensure that your tests are well-structured, maintainable, and free from common mistakes. Use TypeScript and ESLint with Playwright test automation to catch errors early, such as a missing await before an asynchronous call to the Playwright API, which the @typescript-eslint/no-floating-promises rule detects.

Consider the example below.

// tests/example.spec.ts
import { test, expect } from '@playwright/test';

test('example test', async ({ page }) => {
  await page.goto('https://app.qualiti.ai/auth/login');
  // Floating promise: `await` is missing, so the test does not wait for this call,
  // and its failure is not reliably reported against this step
  page.waitForSelector('element-that-does-not-exist');
  await expect(page).toHaveTitle('Example Page');
});

Without linting, the missing await in this test would only surface at runtime, typically as a flaky or confusing failure that you have to diagnose before re-running the tests. A linter flags it before the tests execute. When integrated into your CI/CD pipeline, linting adds another layer of quality control: whenever a code change is pushed, the CI pipeline runs linters first to catch common errors and code-quality issues early.
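For comparison, here is a version of the test that satisfies the no-floating-promises rule. Every Playwright call is awaited, and the `waitForSelector` call is replaced with a web-first assertion that retries until the element is visible or the timeout expires. `#username` is the placeholder selector from the login examples, and the title check is kept from the original.

```typescript
// tests/example.spec.ts (corrected)
import { test, expect } from '@playwright/test';

test('example test', async ({ page }) => {
  await page.goto('https://app.qualiti.ai/auth/login');

  // Awaited web-first assertion: the test waits here and fails with a clear
  // message if the element never becomes visible.
  await expect(page.locator('#username')).toBeVisible();

  await expect(page).toHaveTitle('Example Page');
});
```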

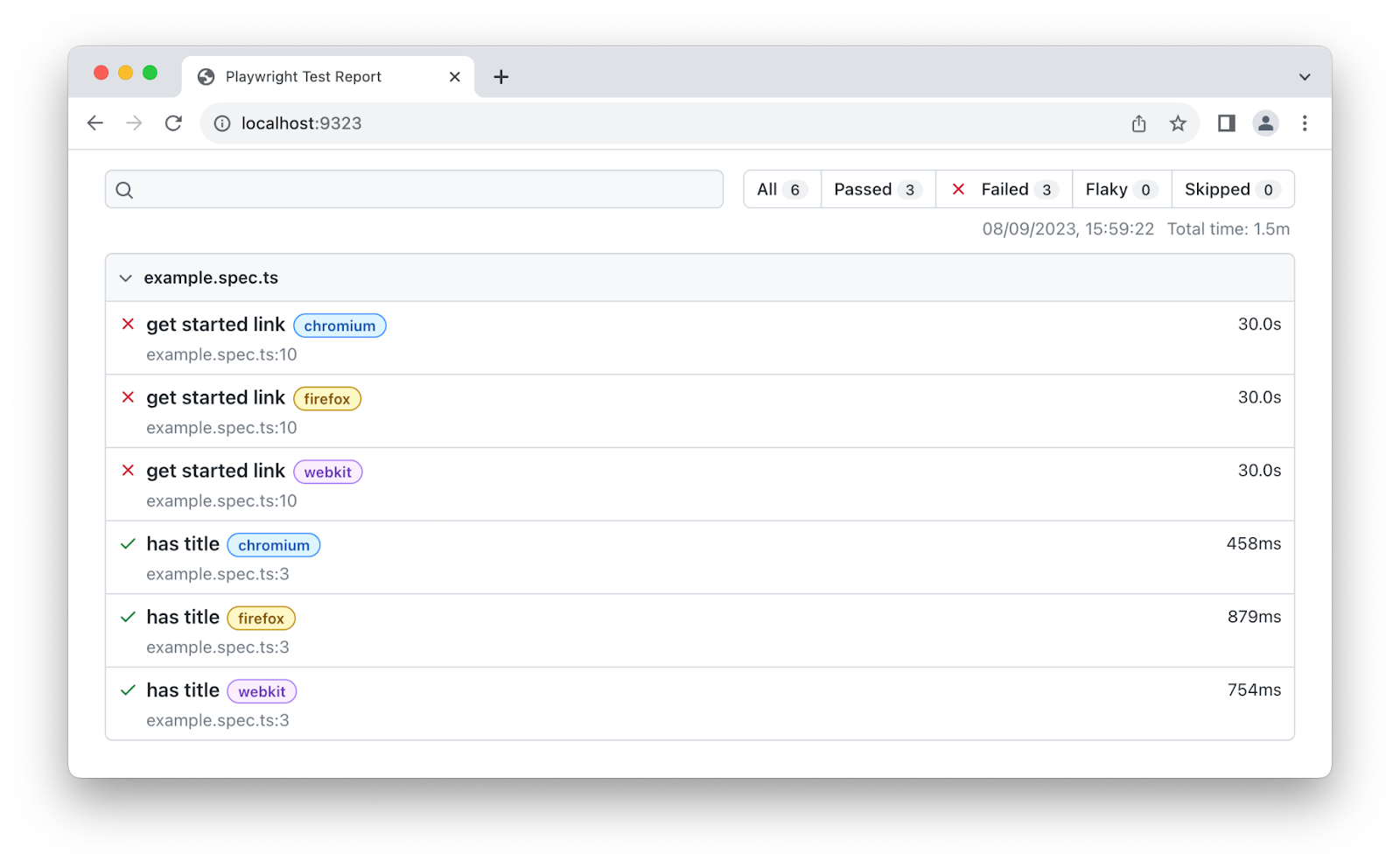

Use reporting and monitoring in your automation workflows

Reporters are tools that generate output for test results, helping you understand how your tests performed. They provide insights into passed and failed tests, execution times, and other relevant metrics. Playwright has the following built-in reporters:

List reporter

The List reporter displays a line for each test run, showing detailed information about failures. It is enabled by default (except in CI, where the dot reporter is the default). Below is an example output in the middle of a test run.

npx playwright test --reporter=list

Running 124 tests using 6 workers
1 ✓ should access error in env (438ms)
2 ✓ handle long test names (515ms)
3 x 1) render expected (691ms)
4 ✓ should timeout (932ms)
5 should repeat each:
6 ✓ should respect enclosing .gitignore (569ms)
7 should teardown env after timeout:
8 should respect excluded tests:
9 ✓ should handle env beforeEach error (638ms)
10 should respect enclosing .gitignore:

Line reporter

The Line reporter provides a more concise output than the list reporter, showing the last finished test and reporting failures inline. It is useful for large test suites where it shows the progress but does not spam the output by listing all the tests.

npx playwright test --reporter=line

Running 124 tests using 6 workers
1) dot-reporter.spec.ts:20:1 > render expected ===================================================

Error: expect(received).toBe(expected) // Object.is equality

Expected: 1
Received: 0

[23/124] gitignore.spec.ts - should respect nested .gitignore

Dot reporter

The Dot reporter is very concise: it displays a single character per successful test. It is the default on CI, where you may not want a lot of output.

npx playwright test --reporter=dot

Running 124 tests using 6 workers
······F·············································

HTML reporter

The HTML reporter generates a self-contained report folder that can be served as a web page. By default, the HTML report opens automatically if any tests fail. You can change this with the open option of the HTML reporter in the Playwright config (always, never, or on-failure, the default) or with the PLAYWRIGHT_HTML_OPEN environment variable.

Note that the report is written to the playwright-report folder in the current working directory. Opening its index.html directly from the file system (via file://) will not work as expected; serve it instead with npx playwright show-report. Refer to Playwright’s documentation for more details.

Blob reporter

Captures all details about the test run for later use. It is particularly useful for merging reports from sharded tests. By default, the report is written into the blob-report directory in the current working directory.

JSON reporter

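Outputs a comprehensive object with test run information. By default, it is printed to standard output, so you will usually want to write it to a file, either with the PLAYWRIGHT_JSON_OUTPUT_NAME environment variable or with the reporter's outputFile option in the config: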

PLAYWRIGHT_JSON_OUTPUT_NAME=results.json npx playwright test --reporter=json

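The example below shows a simplified summary of a test run. Note that Playwright's built-in JSON reporter structures its output differently, with top-level config, suites, errors, and stats keys; a summary in this shape is typical of third-party JSON reporters.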

{
  "results": {
    "tool": {
      "name": "playwright"
    },
    "summary": {
      "tests": 1,
      "passed": 1,
      "failed": 0,
      "pending": 0,
      "skipped": 0,
      "other": 0,
      "start": 1706828654274,
      "stop": 1706828655782
    },
    "tests": [
      {
        "name": "dummy test",
        "status": "passed",
        "duration": 100
      }
    ]
  }
}

A more detailed report can also be generated, including screenshots, tags, failure messages, etc.

JUnit reporter

Produces a JUnit-style XML report. You’ll need to output this to an XML file. Similar to the above, use the PLAYWRIGHT_JUNIT_OUTPUT_NAME environment variable.
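Reporters can also be configured in `playwright.config.ts`, and several can run at once. Here is a sketch combining the options described above. The output paths are arbitrary choices, not defaults.

```typescript
import { defineConfig } from '@playwright/test';

export default defineConfig({
  reporter: [
    // Concise progress output on CI, one line per test locally.
    [process.env.CI ? 'dot' : 'list'],
    // HTML report in ./playwright-report; never open it automatically.
    ['html', { open: 'never' }],
    // Machine-readable results written to files instead of stdout.
    ['json', { outputFile: 'reports/results.json' }],
    ['junit', { outputFile: 'reports/results.xml' }],
  ],
});
```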

Custom reporter

The built-in Playwright test automation reporters may not meet your specific requirements. For example, you may want to integrate test results with issue-tracking software, analytics platforms, or a CI/CD pipeline. A custom reporter enables this integration by providing the data in a suitable format.
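Here is a minimal sketch of a custom reporter that collects failed tests so they could be forwarded to another system. To stay self-contained, it declares small stand-in types for the fields it reads. In a real project, you would import `Reporter`, `TestCase`, and `TestResult` from `@playwright/test/reporter` and declare `implements Reporter`. The class name and the forwarding step are hypothetical.

```typescript
// Minimal stand-ins for the fields this sketch reads from Playwright's
// TestCase and TestResult types.
type TestStatus = 'passed' | 'failed' | 'timedOut' | 'skipped' | 'interrupted';

interface TestCaseLike {
  title: string;
}

interface TestResultLike {
  status: TestStatus;
  duration: number;
}

interface FailureRecord {
  title: string;
  status: TestStatus;
  durationMs: number;
}

class FailureSummaryReporter {
  private passed = 0;
  private readonly failures: FailureRecord[] = [];

  // Playwright calls this once per test attempt, so with retries enabled
  // a flaky test is recorded once for each attempt.
  onTestEnd(test: TestCaseLike, result: TestResultLike): void {
    if (result.status === 'passed') {
      this.passed += 1;
    } else if (result.status !== 'skipped') {
      this.failures.push({ title: test.title, status: result.status, durationMs: result.duration });
    }
  }

  // Called once after the whole run. Hypothetical integration point:
  // replace console.log with a call to your issue tracker or analytics API.
  onEnd(): void {
    console.log(JSON.stringify(this.summary()));
  }

  summary(): { passed: number; failures: FailureRecord[] } {
    return { passed: this.passed, failures: [...this.failures] };
  }
}

export default FailureSummaryReporter;
```

Assuming the class is saved as `failure-summary-reporter.ts`, you would register it next to a built-in reporter with `reporter: [['list'], ['./failure-summary-reporter.ts']]`.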

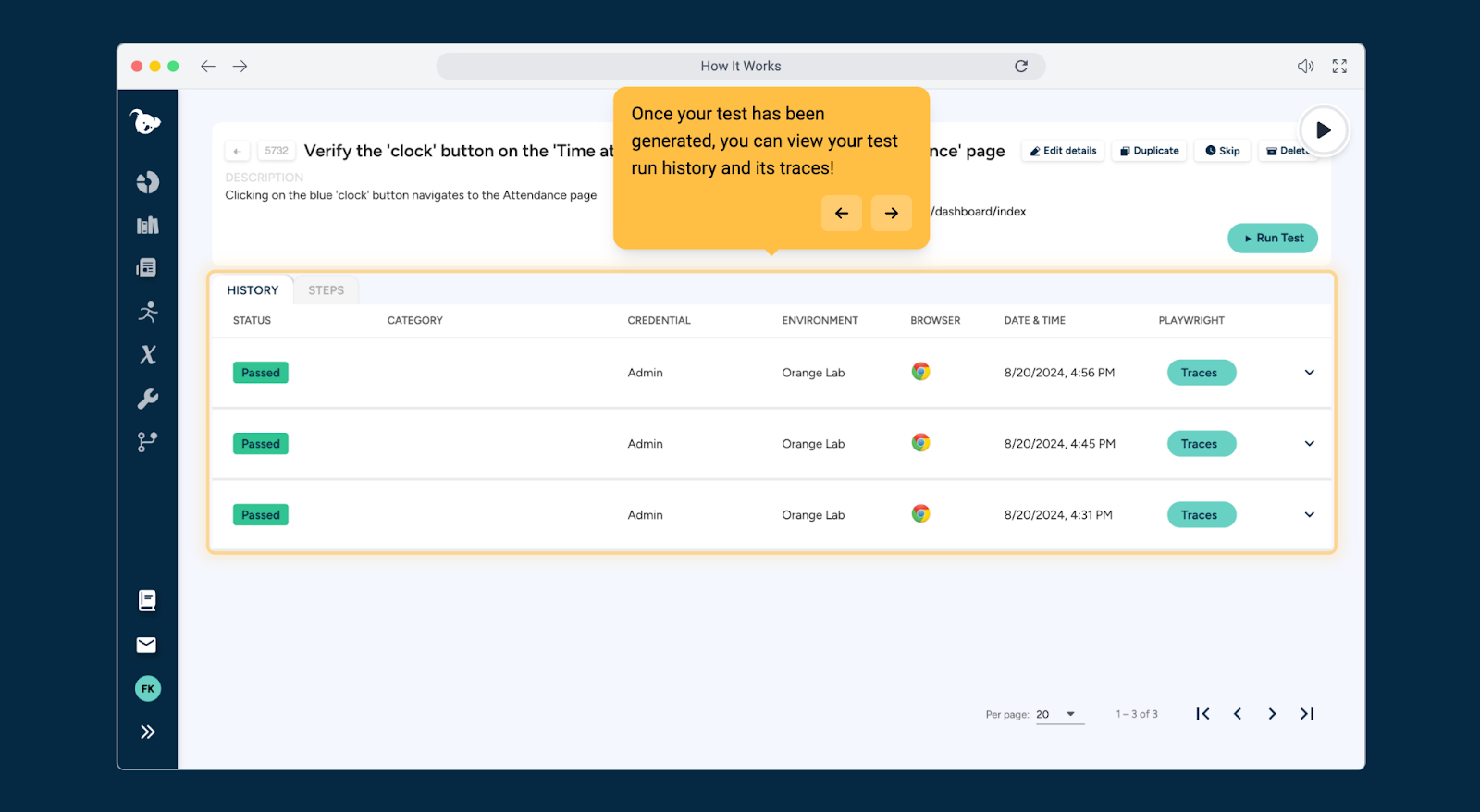

Using Qualiti to automate testing

Qualiti simplifies test maintenance by automatically updating test suites whenever code changes. Developers avoid manually adjusting tests for each update, saving time and ensuring testing stays in sync with development.

With its AI tools, Qualiti creates complete test suites in minutes, analyzing the content on each page to generate detailed tests. This approach provides robust coverage without requiring extensive coding. Teams can launch new test suites quickly, even for complex applications.

Technical and non-technical personnel alike can review results in the History panel.

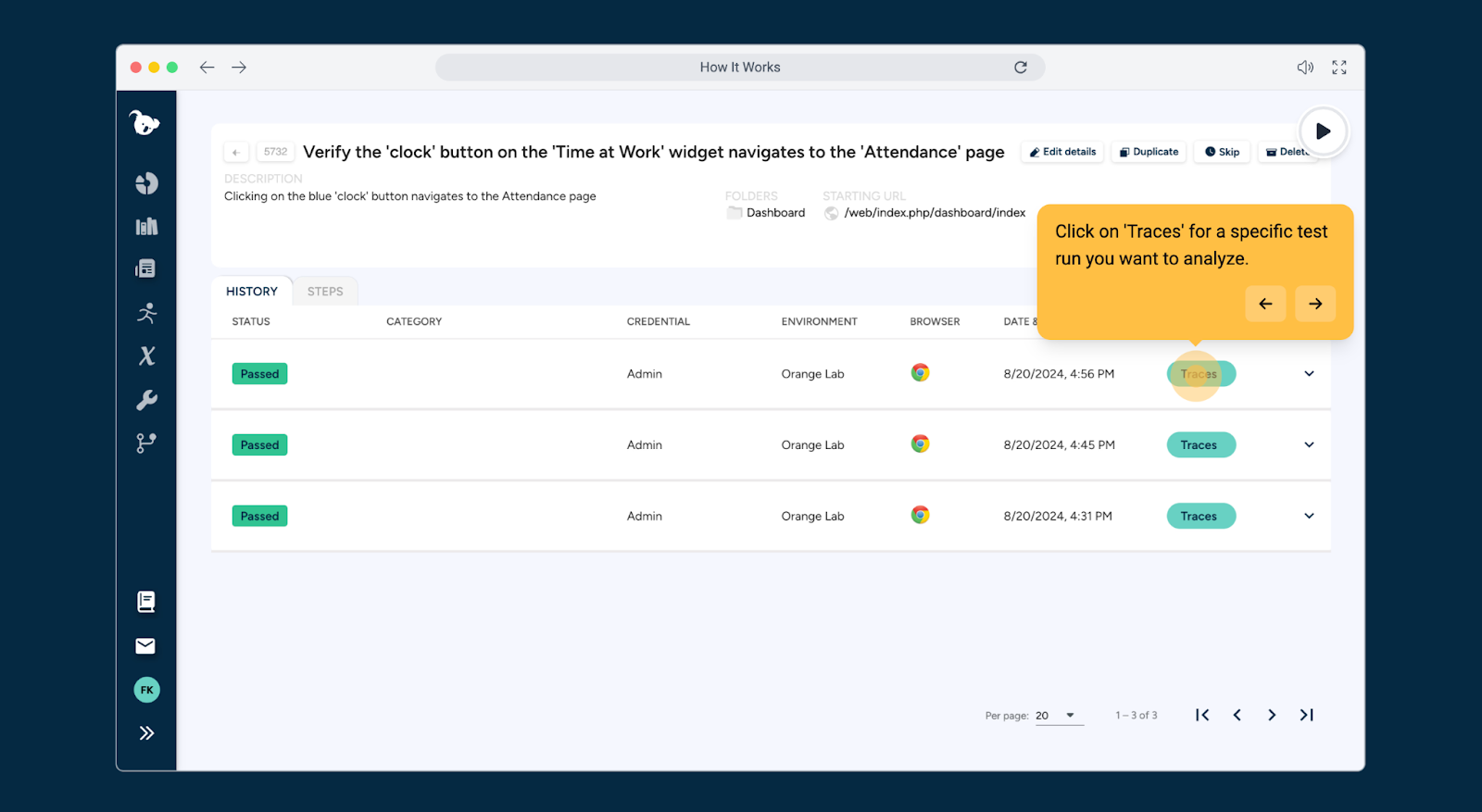

In the UI, engineers can review failed test traces to determine the code paths a test took.

The Trace Viewer provides full logs, screenshots, and test code traces. Developers can view live test logs, error reports, and console output.

The low-code interface allows anyone on the team—not just developers—to create tests, making testing more collaborative and efficient. Non-technical users can add tests using plain English, making testing a shared effort across the organization.


Last thoughts

While automated tests provide significant benefits, be mindful that if the setup time exceeds the time saved during execution, it may not be worth the effort. Qualiti builds, runs, and maintains test environments for you. Using code or plain language, you can build an average test suite on the platform in less than an hour. It assesses necessary test groups, cases, and steps based on application context while letting you add or modify any test manually.

By applying the best practices outlined in this article and leveraging the power of Playwright test automation combined with Qualiti, organizations can reap the benefits of effective test automation and deliver higher-quality software.